Last edited by 玉尺书生 on 2025-2-14 18:29


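Preparation:

First, my environment:

fnOS version: 0.8.36. I am using a Tesla P4 card with the NVIDIA-560 driver installed from the App Center. Owners of other NVIDIA cards can use this tutorial as a reference.

This post draws on the following article by 陪玩:

https://club.fnnas.com/forum.php?mod=viewthread&tid=14106

First, over SSH, run the following command as root to add the apt repository: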

curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg \
&& curl -s -L https://mirrors.ustc.edu.cn/libnvidia-container/stable/deb/nvidia-container-toolkit.list | \
sed 's#deb https://nvidia.github.io#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://mirrors.ustc.edu.cn#g' | \
tee /etc/apt/sources.list.d/nvidia-container-toolkit.list
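
Before moving on, it may be worth confirming that the sed substitution actually took effect, since apt will reject the repository if the signed-by option is missing. The following is only a sketch: the helper name check_repo_list is made up for illustration, and it assumes the list file uses one "deb ..." entry per line.

```shell
# Hypothetical helper: succeed only if the list file has at least one
# "deb" entry and every such entry carries the signed-by option.
check_repo_list() {
    list_file="$1"
    # There must be at least one deb entry at all.
    if ! grep -q '^deb ' "$list_file" 2>/dev/null; then
        echo "no deb entries in $list_file" >&2
        return 1
    fi
    # Any deb entry without signed-by= means the sed step did not apply.
    if grep '^deb ' "$list_file" | grep -qv 'signed-by='; then
        echo "entry without signed-by in $list_file" >&2
        return 1
    fi
    echo "ok: $list_file"
}

# Usage on the NAS:
# check_repo_list /etc/apt/sources.list.d/nvidia-container-toolkit.list
```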

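Once that command finishes, just install the package:

sudo apt update && sudo apt install nvidia-container-toolkit

During installation you will be prompted: Do you want to continue? [Y/n]

Just enter y.

If nothing goes wrong, the output looks like this: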

root@fnOS-device:~# sudo apt update && sudo apt install nvidia-container-toolkit

Get:1 https://mirrors.ustc.edu.cn/libnvidia-container/stable/deb/amd64 InRelease [1,477 B]

Get:2 https://mirrors.ustc.edu.cn/libnvidia-container/stable/deb/amd64 Packages [16.7 kB]

Get:3 https://mirrors.tuna.tsinghua.edu.cn/debian bookworm InRelease [151 kB]

Get:4 https://mirrors.tuna.tsinghua.edu.cn/debian bookworm-updates InRelease [55.4 kB]

Get:5 https://mirrors.tuna.tsinghua.edu.cn/debian bookworm-backports InRelease [59.0 kB]

Get:6 https://mirrors.tuna.tsinghua.edu.cn/debian-security bookworm-security InRelease [48.0 kB]

Get:7 https://mirrors.tuna.tsinghua.edu.cn/docker-ce/linux/debian bookworm InRelease [43.3 kB]

Get:8 https://mirrors.tuna.tsinghua.edu.cn/debian bookworm/main amd64 Packages [8,792 kB]

Get:9 https://pkg.ltec.ch/public focal InRelease [2,880 B]

Get:10 https://mirrors.tuna.tsinghua.edu.cn/debian bookworm/main Translation-en [6,109 kB]

Get:11 https://pkg.ltec.ch/public focal/main amd64 Packages [428 B]

Get:12 https://mirrors.tuna.tsinghua.edu.cn/debian bookworm/contrib amd64 Packages [54.1 kB]

Get:13 https://mirrors.tuna.tsinghua.edu.cn/debian bookworm/contrib Translation-en [48.8 kB]

Get:14 https://mirrors.tuna.tsinghua.edu.cn/debian bookworm/non-free amd64 Packages [97.3 kB]

Get:15 https://mirrors.tuna.tsinghua.edu.cn/debian bookworm/non-free Translation-en [67.0 kB]

Get:16 https://mirrors.tuna.tsinghua.edu.cn/debian bookworm/non-free-firmware amd64 Packages [6,240 B]

Get:17 https://mirrors.tuna.tsinghua.edu.cn/debian bookworm/non-free-firmware Translation-en [20.9 kB]

Get:18 https://mirrors.tuna.tsinghua.edu.cn/debian bookworm-updates/main amd64 Packages [13.5 kB]

Get:19 https://mirrors.tuna.tsinghua.edu.cn/debian bookworm-updates/main Translation-en [16.0 kB]

Get:20 https://mirrors.tuna.tsinghua.edu.cn/debian bookworm-updates/contrib amd64 Packages [768 B]

Get:21 https://mirrors.tuna.tsinghua.edu.cn/debian bookworm-updates/contrib Translation-en [408 B]

Get:22 https://mirrors.tuna.tsinghua.edu.cn/debian bookworm-updates/non-free amd64 Packages [12.8 kB]

Get:23 https://mirrors.tuna.tsinghua.edu.cn/debian bookworm-updates/non-free Translation-en [7,744 B]

Get:24 https://mirrors.tuna.tsinghua.edu.cn/debian bookworm-updates/non-free-firmware amd64 Packages [616 B]

Get:25 https://mirrors.tuna.tsinghua.edu.cn/debian bookworm-updates/non-free-firmware Translation-en [384 B]

Get:26 https://mirrors.tuna.tsinghua.edu.cn/debian bookworm-backports/main amd64 Packages [282 kB]

Get:27 https://mirrors.tuna.tsinghua.edu.cn/debian bookworm-backports/main Translation-en [235 kB]

Get:28 https://mirrors.tuna.tsinghua.edu.cn/debian bookworm-backports/contrib amd64 Packages [5,616 B]

Get:29 https://mirrors.tuna.tsinghua.edu.cn/debian bookworm-backports/contrib Translation-en [5,448 B]

Get:30 https://mirrors.tuna.tsinghua.edu.cn/debian bookworm-backports/non-free amd64 Packages [11.1 kB]

Get:31 https://mirrors.tuna.tsinghua.edu.cn/debian bookworm-backports/non-free Translation-en [7,320 B]

Get:32 https://mirrors.tuna.tsinghua.edu.cn/debian bookworm-backports/non-free-firmware amd64 Packages [3,852 B]

Get:33 https://mirrors.tuna.tsinghua.edu.cn/debian bookworm-backports/non-free-firmware Translation-en [2,848 B]

Get:34 https://mirrors.tuna.tsinghua.edu.cn/debian-security bookworm-security/main amd64 Packages [243 kB]

Get:35 https://mirrors.tuna.tsinghua.edu.cn/debian-security bookworm-security/main Translation-en [144 kB]

Get:36 https://mirrors.tuna.tsinghua.edu.cn/debian-security bookworm-security/contrib amd64 Packages [644 B]

Get:37 https://mirrors.tuna.tsinghua.edu.cn/debian-security bookworm-security/contrib Translation-en [372 B]

Get:38 https://mirrors.tuna.tsinghua.edu.cn/debian-security bookworm-security/non-free-firmware amd64 Packages [688 B]

Get:39 https://mirrors.tuna.tsinghua.edu.cn/debian-security bookworm-security/non-free-firmware Translation-en [472 B]

Get:40 https://mirrors.tuna.tsinghua.edu.cn/docker-ce/linux/debian bookworm/stable amd64 Packages [34.9 kB]

Fetched 16.6 MB in 4s (4,133 kB/s)

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

205 packages can be upgraded. Run 'apt list --upgradable' to see them.

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

The following additional packages will be installed:

libnvidia-container-tools libnvidia-container1 nvidia-container-toolkit-base

The following NEW packages will be installed:

libnvidia-container-tools libnvidia-container1 nvidia-container-toolkit nvidia-container-toolkit-base

0 upgraded, 4 newly installed, 0 to remove and 205 not upgraded.

Need to get 5,805 kB of archives.

After this operation, 27.7 MB of additional disk space will be used.

Do you want to continue? [Y/n] Y

Get:1 https://mirrors.ustc.edu.cn/libnvidia-container/stable/deb/amd64 libnvidia-container1 1.17.4-1 [925 kB]

Get:2 https://mirrors.ustc.edu.cn/libnvidia-container/stable/deb/amd64 libnvidia-container-tools 1.17.4-1 [20.2 kB]

Get:3 https://mirrors.ustc.edu.cn/libnvidia-container/stable/deb/amd64 nvidia-container-toolkit-base 1.17.4-1 [3,672 kB]

Get:4 https://mirrors.ustc.edu.cn/libnvidia-container/stable/deb/amd64 nvidia-container-toolkit 1.17.4-1 [1,188 kB]

Fetched 5,805 kB in 1s (5,828 kB/s)

Selecting previously unselected package libnvidia-container1:amd64.

(Reading database ... 76516 files and directories currently installed.)

Preparing to unpack .../libnvidia-container1_1.17.4-1_amd64.deb ...

Unpacking libnvidia-container1:amd64 (1.17.4-1) ...

Selecting previously unselected package libnvidia-container-tools.

Preparing to unpack .../libnvidia-container-tools_1.17.4-1_amd64.deb ...

Unpacking libnvidia-container-tools (1.17.4-1) ...

Selecting previously unselected package nvidia-container-toolkit-base.

Preparing to unpack .../nvidia-container-toolkit-base_1.17.4-1_amd64.deb ...

Unpacking nvidia-container-toolkit-base (1.17.4-1) ...

Selecting previously unselected package nvidia-container-toolkit.

Preparing to unpack .../nvidia-container-toolkit_1.17.4-1_amd64.deb ...

Unpacking nvidia-container-toolkit (1.17.4-1) ...

Setting up nvidia-container-toolkit-base (1.17.4-1) ...

Setting up libnvidia-container1:amd64 (1.17.4-1) ...

Setting up libnvidia-container-tools (1.17.4-1) ...

Setting up nvidia-container-toolkit (1.17.4-1) ...

Processing triggers for libc-bin (2.36-9+deb12u4) ...

ldconfig: /usr/local/lib/libzmq.so.5 is not a symbolic link

Modify the /etc/docker/daemon.json configuration

Simply use nvidia-ctk to update the configuration:

nvidia-ctk runtime configure --runtime=docker --config=/etc/docker/daemon.json

You should normally see output like this:

root@fnOS-device:~# nvidia-ctk runtime configure --runtime=docker --config=/etc/docker/daemon.json

INFO[0000] Loading config from /etc/docker/daemon.json

INFO[0000] Wrote updated config to /etc/docker/daemon.json

INFO[0000] It is recommended that docker daemon be restarted.
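
The last line of that output recommends restarting the Docker daemon. A minimal sketch of that step, assuming Docker on your system is managed by systemd under the unit name docker (not confirmed for every fnOS version). The helper name is made up for illustration.

```shell
# Hypothetical helper. Assumption: Docker runs as a systemd unit
# named "docker" on this system.
restart_docker_and_list_runtimes() {
    systemctl restart docker || return 1
    # Print the runtimes known to the daemon as JSON;
    # "nvidia" should appear among them after the restart.
    docker info --format '{{json .Runtimes}}'
}

# Usage on the NAS (as root):
# restart_docker_and_list_runtimes
```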

Check that the configuration was actually updated. Note that the registry mirror shown here is one I set myself.

root@fnOS-device:~# cat /etc/docker/daemon.json

{
    "data-root": "/vol1/docker",
    "insecure-registries": [
        "127.0.0.1:19827"
    ],
    "live-restore": true,
    "registry-mirrors": [
        "https://docker.1ms.run"
    ],
    "runtimes": {
        "nvidia": {
            "args": [],
            "path": "nvidia-container-runtime"
        }
    }
}

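Check and fix the driver issue

If, like me, you are using the driver from the App Center, it needs the following fix.

Copy and run the following commands one at a time.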

mv /usr/lib/x86_64-linux-gnu/libnvidia-ml.so.1 /usr/lib/x86_64-linux-gnu/libnvidia-ml.so.1.bak

mv /usr/lib/x86_64-linux-gnu/libnvidia-ml.so /usr/lib/x86_64-linux-gnu/libnvidia-ml.so.bak

ln -sf /usr/lib/x86_64-linux-gnu/libnvidia-ml.so.560.28.03 /usr/lib/x86_64-linux-gnu/libnvidia-ml.so.1

ln -sf /usr/lib/x86_64-linux-gnu/libnvidia-ml.so.560.28.03 /usr/lib/x86_64-linux-gnu/libnvidia-ml.so

mv /usr/lib/x86_64-linux-gnu/libcuda.so /usr/lib/x86_64-linux-gnu/libcuda.so.bak

mv /usr/lib/x86_64-linux-gnu/libcuda.so.1 /usr/lib/x86_64-linux-gnu/libcuda.so.1.bak

ln -sf /usr/lib/x86_64-linux-gnu/libcuda.so.560.28.03 /usr/lib/x86_64-linux-gnu/libcuda.so

ln -sf /usr/lib/x86_64-linux-gnu/libcuda.so.560.28.03 /usr/lib/x86_64-linux-gnu/libcuda.so.1
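
The commands above hard-code driver version 560.28.03. If your driver version differs, the same backup-and-symlink pattern could be wrapped in a small function. This is only a sketch: relink_lib is a hypothetical helper, and the version value in the usage comment is the one from this post and must be changed to match your own files.

```shell
# Hypothetical helper: replace DIR/NAME with a symlink to DIR/TARGET.
# A regular file at DIR/NAME is first moved to DIR/NAME.bak;
# an existing symlink is simply re-pointed without a backup.
relink_lib() {
    dir="$1"; name="$2"; target="$3"
    # Refuse to create a dangling link.
    [ -f "$dir/$target" ] || { echo "missing $dir/$target" >&2; return 1; }
    if [ -e "$dir/$name" ] && [ ! -L "$dir/$name" ]; then
        mv "$dir/$name" "$dir/$name.bak"
    fi
    ln -sf "$dir/$target" "$dir/$name"
}

# Usage on the NAS (as root). The version is an assumption to adjust:
# ver=560.28.03
# libdir=/usr/lib/x86_64-linux-gnu
# for base in libnvidia-ml libcuda; do
#     relink_lib "$libdir" "$base.so.1" "$base.so.$ver"
#     relink_lib "$libdir" "$base.so"   "$base.so.$ver"
# done
```

Running it a second time should leave the .bak files untouched, because by then the names are already symlinks.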

Verify the result:

ls -lh /usr/lib/x86_64-linux-gnu/libnvidia-ml.so*

The output is as follows:

root@Nas:~# ls -lh /usr/lib/x86_64-linux-gnu/libnvidia-ml.so*

lrwxrwxrwx 1 root root 51 Feb 9 14:35 /usr/lib/x86_64-linux-gnu/libnvidia-ml.so -> /usr/lib/x86_64-linux-gnu/libnvidia-ml.so.560.28.03

lrwxrwxrwx 1 root root 51 Feb 9 14:35 /usr/lib/x86_64-linux-gnu/libnvidia-ml.so.1 -> /usr/lib/x86_64-linux-gnu/libnvidia-ml.so.560.28.03

-rwxr-xr-x 1 root root 2.1M Sep 6 14:48 /usr/lib/x86_64-linux-gnu/libnvidia-ml.so.1.bak

-rwxr-xr-x 1 root root 2.1M Sep 6 14:48 /usr/lib/x86_64-linux-gnu/libnvidia-ml.so.560.28.03

-rwxr-xr-x 1 root root 2.1M Sep 6 14:48 /usr/lib/x86_64-linux-gnu/libnvidia-ml.so.bak

ls -lh /usr/lib/x86_64-linux-gnu/libcuda.so*

The output is as follows:

root@Nas:~# ls -lh /usr/lib/x86_64-linux-gnu/libcuda.so*

lrwxrwxrwx 1 root root 46 Feb 9 14:39 /usr/lib/x86_64-linux-gnu/libcuda.so -> /usr/lib/x86_64-linux-gnu/libcuda.so.560.28.03

lrwxrwxrwx 1 root root 46 Feb 9 14:39 /usr/lib/x86_64-linux-gnu/libcuda.so.1 -> /usr/lib/x86_64-linux-gnu/libcuda.so.560.28.03

-rwxr-xr-x 1 root root 34M Sep 6 14:48 /usr/lib/x86_64-linux-gnu/libcuda.so.1.bak

-rwxr-xr-x 1 root root 34M Sep 6 14:48 /usr/lib/x86_64-linux-gnu/libcuda.so.560.28.03

-rwxr-xr-x 1 root root 34M Sep 6 14:48 /usr/lib/x86_64-linux-gnu/libcuda.so.bak

Preparation is complete.
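
Before deploying anything, a quick way to confirm that containers can see the GPU is to run nvidia-smi in a throwaway container. A sketch under assumptions: the helper name is made up, and the default image ubuntu is just an example that has to be pulled from your registry mirror first.

```shell
# Hypothetical helper: run nvidia-smi inside a temporary container.
# The image is an assumption; the toolkit is expected to inject
# nvidia-smi and the driver libraries into the container.
gpu_smoke_test() {
    image="${1:-ubuntu}"
    docker run --rm --gpus all "$image" nvidia-smi
}

# Usage on the NAS:
# gpu_smoke_test
```

If this prints the usual nvidia-smi table with your card listed, the toolkit and the driver fix are most likely working.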

Deploy the containers:

Deploy ollama with docker compose:

services:
  ollama:
    image: ollama/ollama
    container_name: ollama
    restart: unless-stopped
    ports:
      - 11434:11434
    deploy:
      resources:
        reservations:
          devices:
            - driver: nvidia
              count: all
              capabilities: [gpu]
    tty: true
    volumes:
      - ./ollama:/root/.ollama
    networks:
      - bridge-nas
  open-webui:
    image: ghcr.io/open-webui/open-webui:main
    container_name: open-webui
    restart: unless-stopped
    ports:
      - 8080:8080
    environment:
      - OLLAMA_BASE_URL=http://ollama:11434 # format: http://[container name]:[ollama port]
    volumes:
      - ./open-webui:/app/backend/data
    networks:
      - bridge-nas
networks:
  bridge-nas:
    external: false

I won't go into how to deploy with docker compose here. Once the containers are created, they should run normally.
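
For readers who have not used compose from the command line, the deployment might look roughly like this. It is a sketch, not the only way to do it: it assumes the compose file is saved as docker-compose.yml in the directory you pass in, and both helper names are made up for illustration.

```shell
# Hypothetical helper. Assumption: the compose file is saved as
# docker-compose.yml in the directory given as the first argument.
deploy_stack() {
    project_dir="${1:-.}"
    # Start both services in the background, then list their status.
    ( cd "$project_dir" && docker compose up -d && docker compose ps )
}

# Hypothetical helper: check that the ollama container sees the GPU.
ollama_gpu_check() {
    docker exec ollama nvidia-smi
}

# Usage on the NAS:
# deploy_stack /path/to/your/compose/dir
# ollama_gpu_check
```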

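Open http://[NASIP]:8080 in a browser to reach the webui. On first run it prompts you to register. Register, then log in.

Note that after logging in you may see a blank white page that seems unresponsive. Just wait: the webui is trying to reach the OpenAI API, which is very slow without a proxy. You can turn off the OpenAI API in the system settings so that the next login does not take as long.

To do so: click the user icon in the top-right corner, choose Settings → Admin Settings → External Connections, turn off the OpenAI API, and save.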

How to use it

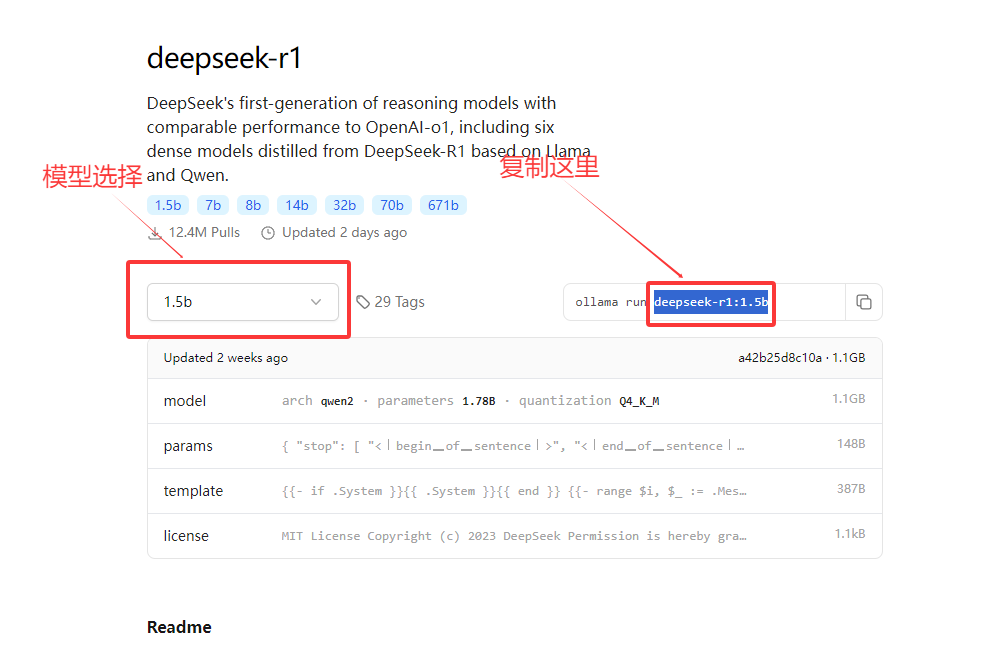

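First, visit: https://ollama.com/library/deepseek-r1

Pick the model you want to deploy.

Then go back to the webui, click "Select a model", paste the model name into the search box, and click "Pull from ollama.com" below it.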
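
If pulling through the webui is slow or fails, the same thing can probably be done from SSH using the ollama CLI inside the container. A sketch under assumptions: the container name ollama and port 11434 come from the compose file above, the helper names are made up, and the tag deepseek-r1:7b is only an example to replace with whichever tag you picked on the library page.

```shell
# Hypothetical helper: pull a model with the ollama CLI inside the
# container named "ollama" (the name used in the compose file).
pull_model() {
    model="${1:?model tag required}"
    docker exec ollama ollama pull "$model"
}

# Hypothetical helper: list locally available models through the
# Ollama HTTP API on the published port.
list_models() {
    curl -s http://localhost:11434/api/tags
}

# Usage on the NAS (the tag is only an example):
# pull_model deepseek-r1:7b
# list_models
```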